Journal of Neurology and Psychology

Research Article

*Address for Correspondence: Prochnow D, Department of Neurology, Heinrich-Heine University Düsseldorf, Moorenstr. 5, 40225 Düsseldorf, Germany, Tel: +49-211-8117889; Fax: +49-211-18485; E-mail: prochnowdenise@gmail.com

Citation: Prochnow D, Steinhäuser L, Brunheim S, Seitz RJ. Differential Emotional State Reasoning in Young and Older Adults: Evidence from Behavioral and Neuroimaging Data. J Neurol Psychol. 2014;2(1): 8.

Copyright © 2013 Prochnow D, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Journal of Neurology and Psychology | ISSN: 2332-3469 | Volume: 2, Issue: 1

Submission: 09 December 2013 | Accepted: 20 January 2014 | Published: 25 January 2014

Reviewed & Approved by: Dr. Cecilia Cheng, Department of Psychology, University of Hong Kong, China

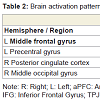

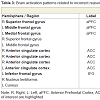

Correct reasoning about high impact emotional states led to a greater activation in the right middle occipital gyrus in the young relative to older adults, and an activation increase in the left middle temporal gyrus in the older relative to the young adults. Correct reasoning about emotional states of low impact was associated with stronger activations in the left aPFC, the left premotor cortex, and the right posterior cingulate cortex in the young relative to the older adults (Table 2). The older adults showed stronger activation in the right transverse temporal gyrus compared to the young adults. Differences in the brain activations evoked during incorrect reasoning about high and low impact emotional states were only present in favor of the young adults as compared to the older adults (Figure 2). They comprised the aPFC, premotor cortex, ACC, IFG and the putamen (Table 3, Figure S1). Regions of interest during reasoning showed several correlations (Table S2). Activation of the right medial aPFC (32/52/18) during reasoning about less relevant emotional states correlated inversely with the older adults’ performance on the Eyes Test (p < 0.05). The less alexithymic the young adults were, the stronger was the activation in its left homologue (-25/55/18) during reasoning about low impact expressions (p < 0.05). Activation of a more inferior left aPFC region (-18/61/3) during reasoning about high impact emotional states correlated inversely with facial affect recognition abilities in the young and older adults (p < 0.05). Activation of the left IFG (-28/22/-15) during reasoning about less relevant expressions correlated with the degree of alexithymia in young adults (p < 0.05) and inversely with self-reported empathy and facial affect recognition in the older adults (p < 0.05). The better the older adults performed in the Eyes Test, the less activated was the right SDMFC during reasoning about low impact expressions (p < 0.05).
Activation of a right ACC cluster (5/19/39) during reasoning about high impact emotional states correlated with reasoning performance (p < 0.01; Figure 2) and facial affect recognition abilities (p < 0.05) in the young adults, but was inversely correlated with self-reported empathy in the older adults (p < 0.05; Figure 2). Activation of its left homologue (-4/16/39) also correlated with reasoning performance in the young adults (p < 0.01; Figure 2). In addition, the less alexithymic the young adults were, the stronger was its activation (p < 0.05; Figure 2).

We additionally performed a conjunction analysis in order to identify regions that the young and older adults shared while viewing the facial expressions and during reasoning itself. No shared regions were identified in relation to viewing the facial expressions. However, the young and older adults shared activation in the left DLFC during correct reasoning about high impact expressions, and in the left IFG during incorrect reasoning about high impact expressions. No common regions were found during correct or incorrect reasoning about low impact emotional states.

Differential Emotional State Reasoning in Young and Older Adults: Evidence from Behavioral and Neuroimaging Data

Prochnow D*, Steinhäuser L, Brunheim S and Seitz RJ

- Department of Neurology, Heinrich-Heine University Düsseldorf, Düsseldorf, Germany

Abstract

The ability to infer the emotions, intentions, and beliefs of others has a self-protecting function in social life but declines with age. Little is known about the cerebral mechanisms underlying this impairment in older adults. We used functional magnetic resonance imaging (fMRI) to map the brain regions associated with an emotional state reasoning paradigm in which subjects were required to infer the emotion of a seen facial expression by choosing one out of four statements describing what might have happened to the depicted person. Behaviorally, empathic reasoning performance correlated inversely with age, with the older subjects (42-61 years, n=12) being significantly worse than the young subjects (22-39 years, n=14) in the accuracy of empathic reasoning. fMRI showed that young and older adults recruited similar brain regions but at different time points during empathic reasoning. In the older adults, higher order control areas became engaged early during viewing the target facial expressions, while in the young adults these were first recruited when all necessary information for the decision was present. Our data suggest that older subjects employ an inefficient mechanism leading to impaired empathic reasoning.

Keywords

Age-differences; Empathy; Theory of mind; fMRI; Deficit; Mechanism

Introduction

Inferring the intentions and emotions of others is fundamental in everyday social interactions. Body language and especially facial expressions have been ascribed key roles in understanding the mindset of other people by virtue of theory of mind (ToM) and empathy [1,2]. More than all other parts of the body, the human face can produce differentiated movement patterns in rapid succession, thereby providing a powerful tool for communicating social information [1]. However, not all facial cues are equally relevant or profitable for a person. In fact, people are endowed with the capacity to differentiate highly relevant from less relevant social information, which allows them to secure their own well-being and to save cognitive resources [3]. Specifically, happy and angry facial expressions represent highly relevant social messages which have immediate implications for the behavior of the observer (high social impact expressions). In contrast, sad or fearful expressions (low social impact expressions) tell a lot about the sender, but their behavioral consequences are vague because they are not vitally essential for the observer [3,4].

There is accumulating evidence that the capacity to reason about the intentions or emotions of others declines with increasing age [5-7]. According to a recent meta-analysis, this impairment affects all domains (cognitive and affective) and all modalities (verbal, visual-static, visual-dynamic), but the evidence regarding the role of other cognitive functions in explaining the deficits is heterogeneous [5,7-9]. Interestingly, however, it was recently shown that the impairment in affective ToM found in older adults was restricted to topics of little relevance for them, while they performed better than young adults under conditions of high relevance [10].

From a neuroimaging perspective, areas repeatedly implicated in empathy and ToM tasks are located in the anterior, inferior and medial prefrontal cortex [11-15]. The majority of these studies examined only young adults [12,13,15,16]. Recently, however, it was shown that young adults as compared to older adults exhibited stronger activation in a ToM-related region in the lateral medial prefrontal cortex when they were confronted with portraits of stigmatized people. High-functioning older adults more strongly recruited inferior prefrontal areas that have been implicated in the control of emotional responses [17]. In addition, there is evidence for a correlation between ToM performance and white matter integrity in young and older adults [18].

Therefore, the goal of the present functional magnetic resonance imaging (fMRI) study was to identify the brain areas underlying the differences between young and older adults in emotional state reasoning. We hypothesized that older adults require more cognitive resources to manage emotional state reasoning as compared to young adults. Specifically, we expected the older adults to show more pronounced activity in lateral and medial prefrontal areas associated with empathy, affective ToM and cognitive control. Also, we hypothesized that young and older adults share regions associated with automatic bottom-up empathy-related processing in the human mirror neuron system (hMNS) like the inferior frontal cortex or the inferior parietal lobule [19]. We expected these regions to correlate with self-reported empathy and explicit facial affect recognition abilities [16,20]. In contrast, we expected activity in higher order top-down modulated areas such as the anterior prefrontal cortex (aPFC) [21], the anterior cingulate cortex (ACC)/paracingulate cortex [22,23] or the superior dorsomedial frontal cortex (SDMFC) [15] to correlate with the subjects’ reasoning performance.

Materials and Methods

Subjects

Our sample comprised 26 adults. Using a similar approach as Richter and Kunzmann [10], two age groups were formed on the basis of the median of the continuous age variable resulting in fourteen young (mean age: 28.64 years, SD = 5.68, range: 22-39 years) and twelve older adults (mean age: 49.50 years, SD = 5.99, range: 42-61 years). Groups differed significantly in age (F = 82.86, p ≤ 0.001) but had comparable educational levels (young adults: 13.79 ± 2.75 years; older adults: 14.00 ± 3.30 years; F = 0.03, n. sig.), neutral face recognition abilities (Benton Facial Recognition Test, F = 0.15, n.sig.) [24], mood (Beck’s Depression Inventory, F = 2.12, n.sig.) [25], general emotional competence (Toronto Alexithymia Scale, F = 0.69, n.sig.) [26], self-reported empathy (Saarbrücker Persönlichkeitsfragebogen, available online, F = 0.57, n. sig.), and facial affect recognition abilities (Difficulty Controlled Emotion Recognition Test - DCERT, a self-programmed adaptation of the Ekman-60-Faces Test using the more recent and standardized Karolinska Directed Emotional Faces, F = 1.20, n.sig.) [27].
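The group comparisons reported for age and education can be approximately reconstructed from the group sizes, means and standard deviations given in the text. The following is a minimal sketch, assuming a standard one-way ANOVA on two groups (which implies 1 and 24 degrees of freedom; the degrees of freedom are not stated in the text). The function name `one_way_anova_f` is introduced here for illustration only.

```python
def one_way_anova_f(groups):
    """F statistic of a one-way ANOVA from per-group (n, mean, sd) summaries."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from the sample standard deviations.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# (n, mean, SD) as reported in the text: young adults first, older adults second.
age_f = one_way_anova_f([(14, 28.64, 5.68), (12, 49.50, 5.99)])
education_f = one_way_anova_f([(14, 13.79, 2.75), (12, 14.00, 3.30)])

print(f"age:       F(1, 24) = {age_f:.2f}")
print(f"education: F(1, 24) = {education_f:.2f}")
```

Both values should land close to the reported F = 82.86 and F = 0.03; small deviations are expected because the published means and standard deviations are rounded.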

Stimulation

Facial expressions representing emotional states (Averaged Karolinska Directed Faces, [28]) of high (angry, happy) and low (sad, fearful) social impact were used as stimuli. The degree of emotional expression was adjusted to the difficulty of their identification as found in 61 healthy volunteers using Ekman and Friesen’s Pictures of Facial Affect [29,30]. Specifically, happiness as the only positive and easily recognizable emotion was presented at a degree of only 50% of the maximal expression, while fear as the most difficult expression was shown at 100%. Following a fixation cross (200 ms) the facial expressions were presented for 1400 ms. Face presentation was followed by the presentation of four sentences (7000 ms) describing situations that might have happened to the person (e.g. “She was threatened by someone.” for fear). Each situation was linked to one of four emotional states (happy, angry, sad, fearful) according to ratings of a test sample. Sentences were comparable in length and the order in which they appeared on screen was randomized. For data analysis, the time interval between the facial expressions and the sentences was jittered around the chosen repetition time (TR) of 2000 ms. Participants were instructed to imagine meeting the depicted person in an everyday situation and to select the described situation he/she most likely has experienced by pressing one out of four buttons.

In an additional control condition scrambled images of the facial expressions were shown for 1400 ms, followed after a jittered time interval by three unrelated sentences (e.g. “The door is open.”) and the target sentence “Press the button” in order to control for reading, motor and memory related activity. The paradigm consisted of 192 experimental condition trials with 48 repetitions of each facial expression and 48 control condition trials.
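The trial structure described above can be sketched as a simple trial list. This is an illustration only: the jitter distribution (uniform between 0.5 and 1.5 TR), the shuffling of trial order, and all names such as `build_trials` are assumptions, since the text states only that the interval was jittered around the TR and that the on-screen order of the sentences was randomized.

```python
import random

FIXATION_MS = 200      # fixation cross
FACE_MS = 1400         # facial expression or scrambled image
SENTENCES_MS = 7000    # four response sentences (experimental trials)
TR_MS = 2000           # repetition time
REPETITIONS = 48       # per facial expression, and for the control condition

IMPACT = {"angry": "high", "happy": "high", "sad": "low", "fearful": "low"}


def build_trials(seed=0):
    """Return a shuffled list of 192 experimental and 48 control trials."""
    rng = random.Random(seed)
    trials = []
    for condition in list(IMPACT) + ["control"]:
        for _ in range(REPETITIONS):
            # ASSUMPTION: uniform jitter of 0.5-1.5 TR between face and sentences.
            jitter_ms = rng.uniform(0.5 * TR_MS, 1.5 * TR_MS)
            # Sentence order on screen is randomized for experimental trials.
            options = list(IMPACT)
            rng.shuffle(options)
            trials.append({
                "condition": condition,
                "impact": IMPACT.get(condition),  # None for control trials
                "jitter_ms": jitter_ms,
                "option_order": options if condition != "control" else None,
            })
    rng.shuffle(trials)  # ASSUMPTION: trial order was randomized
    return trials


trials = build_trials()
n_experimental = sum(t["condition"] != "control" for t in trials)
n_control = sum(t["condition"] == "control" for t in trials)
print(n_experimental, n_control, len(trials))
```

The counts follow directly from the text: four expressions with 48 repetitions each give 192 experimental trials, plus 48 control trials.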

Procedure

Upon arrival, all participants were informed about the study and gave informed written consent to participate. Prior to scanning, they completed screening tests and questionnaires to check for the following exclusion criteria: signs of depression [25], impaired face recognition [24], a history of major mental illness, intake of psychotropic medication, and contraindications to scanning such as irremovable metals or implants, claustrophobia, visual disturbances not correctable by MRI compatible glasses, or pregnancy. Scanning itself was preceded by a training session with different emotional states in the scanner to familiarize the subjects with the experimental set-up. It was followed by behavioral testing assessing self-reported empathy [31], alexithymia [26], facial affect recognition (DCERT) and mind reading from photographs depicting only pairs of eyes (Eyes Test) [2]. The study was approved by the Ethics Committee of the Heinrich-Heine University Düsseldorf and was conducted according to the Declaration of Helsinki.

Imaging

Scanning was performed on a 3 T Siemens Trio TIM MRI scanner (Erlangen, Germany) using an EPI-GE sequence (TR = 2000 ms, TE = 30 ms, flip-angle = 90°, FOV = 192 x 192 x 112 mm³, acquisition matrix = 128 x 128 pixels). The whole brain was covered by 28 transversal slices oriented parallel to the bi-commissural plane (in-plane resolution = 1.5 mm x 1.5 mm, slice thickness = 4.0 mm, interslice gap = 0 mm, FOV = 256 x 256 x 192 mm³, acquisition matrix = 256 x 256 pixels). In each run, 1200 volumes were acquired. A 3D-T1-weighted MP-RAGE (magnetization-prepared rapid gradient echo) sequence (TR = 2300 ms, TE = 2.98 ms, flip angle = 90°) with high resolution consisting of 192 sagittal slices (in-plane resolution = 1 mm x 1 mm, slice thickness = 1 mm, interslice gap = 0 mm) was also acquired in each subject.
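The acquisition parameters can be cross-checked with simple arithmetic: in-plane voxel size is the field of view divided by the matrix size, and slab thickness is the number of slices times the slice thickness (with no gap). The sketch below uses only the numbers given in the text; the helper name `voxel_size_mm` is hypothetical.

```python
def voxel_size_mm(fov_mm, matrix):
    """In-plane voxel edge length from field of view and acquisition matrix."""
    return fov_mm / matrix


# First parameter set (FOV 192 mm, matrix 128): matches the stated 1.5 mm EPI resolution.
epi_in_plane = voxel_size_mm(192, 128)
# 28 slices of 4.0 mm with no gap: matches the 112 mm extent of the first FOV.
epi_slab = 28 * 4.0
# 1200 volumes at TR = 2000 ms, converted to minutes per run.
run_minutes = 1200 * 2000 / 1000 / 60

# Second parameter set (FOV 256 mm, matrix 256) gives 1 mm voxels, and
# 192 slices of 1 mm give a 192 mm slab.
second_in_plane = voxel_size_mm(256, 256)
mprage_slab = 192 * 1.0

print(epi_in_plane, epi_slab, run_minutes, second_in_plane, mprage_slab)
```

On this arithmetic, the first FOV and matrix agree with the stated functional resolution, whereas the second FOV and matrix agree with the 1 mm geometry of the anatomical MP-RAGE scan. This is an observation from the numbers only; the text itself does not say which sequence the second set belongs to.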

Data analysis

Behavioral data were analyzed using SPSS software (PASW, Predictive Analysis Software, version 20). Prior to analysis, all data were tested for normal distribution using the Kolmogorov-Smirnov test. For comparison of means, single factor analyses of variance (ANOVA) were used. Correlation analyses were performed using Spearman coefficients. Imaging data were analyzed using the BrainVoyager QX software package (Brain Innovation, Maastricht, The Netherlands). In each subject, the 2-D slice time-course image data were co-registered with the volumetric 3-D Gradient Echo data sets from the same session. Functional images were spatially normalized and realigned to correct for head movements between scans. Preprocessing of the fMRI data included Gaussian spatial smoothing (FWHM = 6 mm) and temporal filtering as well as the removal of linear trends. Blood oxygenation level dependent (BOLD) changes were analyzed in a rapid event-related model using a random effects group analysis based on a deconvolution general linear model (GLM). The following regressors were used to contrast conditions: baseline (scrambled facial expressions), face (facial expressions with high and low self-relevance), reasoning (subdivided into correct and false responses regarding highly and less relevant emotional states), and control (for motor and reading related activity). A threshold of p < 0.005 (uncorrected) combined with a dynamic cluster threshold calculated using the cluster threshold estimator plugin for BrainVoyager QX (http://www.brainvoyager.com/downloads/plugins_win/plugins_win.html) was applied to all data. For mapping the brain activation patterns related to the event decision, only correct answers were taken into consideration. Coordinates of the activation areas are given in Talairach space [32].
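Two of the analysis steps named above reduce to short formulas: the conversion of a Gaussian smoothing kernel's FWHM to its standard deviation, and the Spearman coefficient as a Pearson correlation computed on ranks. The sketch below illustrates both with the Python standard library. It is not the SPSS or BrainVoyager implementation, and the `activation` and `accuracy` values are made-up illustrative numbers, not data from the study.

```python
import math


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


sigma_mm = fwhm_to_sigma(6.0)  # smoothing kernel from the text (FWHM = 6 mm)

# HYPOTHETICAL values for illustration: ROI signal change vs. task accuracy (%).
activation = [0.8, 0.5, 0.9, 0.2, 0.4]
accuracy = [55, 70, 50, 90, 70]
rho = spearman(activation, accuracy)

print(f"sigma = {sigma_mm:.3f} mm, rho = {rho:.3f}")
```

A negative `rho`, as in this toy example, corresponds to the kind of inverse relation between regional activation and performance that the correlation analyses test for.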

Results

The older adults performed as well as the young adults on the Eyes Test [2] (F = 2.39, n. sig.). Similarly, they performed equally well on the control condition (accuracy: F = 1.20, n. sig.; latency: F = 0.15, n.sig.), indicating that both groups were able to use the response buttons correctly. In contrast, the older subjects performed significantly worse than the young adults in the empathic reasoning paradigm (F = 6.33, p = 0.019). The differences in reasoning accuracy were significant for high impact expressions (F = 7.80, p = 0.01) but only a trend was observed for low impact expressions (F = 4.10, p = 0.054). There were no differences between the older and young adults in total response latency or the response latency for emotional states of high impact expressions (total: F = 0.00, n.sig.; high relevance: F = 1.50, n. sig.). However, the older adults responded faster when reasoning about low impact expressions was required (F = 5.32, p = 0.03). Their response latency was as fast as in the easier control condition, while the young adults responded significantly faster in the control condition than in the more difficult reasoning task (p = 0.002).

Overall, empathic reasoning performance, especially based on facial expressions associated with a high degree of social impact, correlated with years of education (total empathic reasoning: r = 0.46, p = 0.019; high impact empathic reasoning: r = 0.50, p = 0.009) but inversely with age (total empathic reasoning: r = -0.38, p = 0.052; high impact empathic reasoning: r = -0.43, p = 0.030). Additional multiple regression analysis using age (β = -0.41, p = 0.021) and education (β = 0.42, p = 0.021) as predictors explained 34 % of the variance in empathic reasoning performance (F = 5.89, p = 0.009, r² = 0.34, corrected r² = 0.28).
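The corrected r² and the overall F of the regression follow from the explained variance, the sample size and the number of predictors. The sketch below applies the standard formulas, assuming that all 26 subjects entered the regression with two predictors (the text does not state the sample size of this analysis explicitly). The function names are introduced for illustration.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted R² for n observations and k predictors."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, n, k):
    """Overall F statistic of a regression with k predictors and n observations."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


# ASSUMPTION: n = 26 subjects, k = 2 predictors (age and education).
adj = adjusted_r_squared(0.34, n=26, k=2)
f_value = overall_f(0.34, n=26, k=2)

print(f"adjusted R^2 = {adj:.2f}, F(2, 23) = {f_value:.2f}")
```

The adjusted value agrees with the reported corrected r² of 0.28, and the F value comes out close to the reported 5.89; the small gap is consistent with r² having been rounded to two decimals.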

Further, total empathic reasoning performance correlated with facial affect recognition ability in the young adults (r = 0.63, p = 0.015) and with self-reported empathy in the older adults (r = 0.62, p = 0.033), while performance on the Eyes Test correlated neither with emotional competence/alexithymia, self-reported empathy nor facial affect recognition ability in the young or older adults.

From a neural perspective, we were interested in mapping the brain activation patterns when the subjects were confronted with the emotional expressions that had to be empathically evaluated, and during emotional state reasoning when the subjects chose one of the given situations. During reasoning we mapped the brain regions separately for correct and incorrect conclusions, since we expected invalid conclusions to go along with stronger activations due to higher perceived difficulty and involvement of more cognitive resources. Specifically, we aimed at discovering differences in brain activation between young and older adults.

Viewing the facial expressions of high and low social impact as contrasted with the scrambled faces led to stronger activations only in the older adults (Figure 1). These included the anterior prefrontal cortex, dorsolateral and superior dorsomedial frontal cortex, inferior frontal cortex and anterior insula, temporal cortex, the temporo-parietal junction, pre- and postcentral gyri, and the inferior parietal lobule (Table 1, Figure S1). We defined regions of interest and calculated correlation coefficients between their mean β-value representing mean percent signal change and our behavioral data (Table S1). Activation in the left superior aPFC (-22/64/24) during viewing low impact expressions correlated inversely with performance on the empathic reasoning task in the older adults (p < 0.01). Activation of the left IPL (-52/-26/33) during confrontation with expressions of low social impact correlated inversely with total facial affect recognition abilities in the older adults (p < 0.05).

Figure 1: Selected regions of interest during viewing facial expressions. Activations of selected regions in transversal (z = 9; top left) and coronal (y = 62; bottom left) view, as well as their degree of percent signal change (PSC) during the different events of interest in the young (light grey) and older (dark grey) adults at p < 0.005 in combination with a dynamic cluster threshold (for further details see methods, top & middle right) and the inverse Spearman correlation between activation during viewing facial expressions of low social impact in the older adults and their total reasoning performance (bottom right).

Figure 2: Selected regions of interest during reasoning. Activations of selected regions in sagittal view (x = 4 top left; x = 2 top right), as well as their degree of percent signal change (PSC) during the different events of interest in the young (light grey) and older (dark grey) adults at p < 0.005 in combination with a dynamic cluster threshold (for further details see methods, middle left & right).

Discussion

The novel finding of this combined behavioral and fMRI study was that older adults who showed impairments in empathic reasoning accuracy compared to young adults recruited similar empathy, ToM, and cognitive control related brain regions, but that there were significant differences in the neural time courses when these regions became engaged during the task.

Our results support the notion of a decrease of affective theory of mind in later adulthood [5-7]. This deficit was related to the complex emotional state reasoning task but was absent when the conditions were less multifaceted in the Eyes Test. Furthermore, self-reported empathy, general emotional competence and explicit facial affect recognition ability were unaffected by the deficit. However, empathic reasoning performance correlated with facial affect recognition ability in young adults, and with self-reported empathy in older adults. Our results go beyond those of others [10] in showing that the older adults’ impairment in emotional state reasoning accuracy affected both high impact emotional states, which were considered highly relevant for the observer, and, as a trend, low impact emotional states associated with less relevance for the observer. Notably, older adults responded significantly faster than young adults when inference of a low impact emotional state was required. Their response latency was similar to that during the much easier control task, suggesting a lower motivation to reason about the cause of emotional states of low social impact.

Owing to the assumption of stronger reliance on cognitive resources, we expected older adults to exhibit stronger activations in bottom-up modulated areas related to a basal empathic response, and in top-down modulated higher-order prefrontal areas associated with ToM. Indeed, older adults showed more pronounced activity in mirror neuron associated regions such as the IFG and IPL [13,15,16,33,34], empathy-related areas such as the anterior insula [14,15,35], higher order areas of cognitive control [21], decision-making [15,36,37] and ToM [16,21,38,39] during viewing facial expressions of either high or low social impact. During reasoning about these emotional states we observed stronger activations in a similar but higher-order area dominated network in young adults as compared to older adults.

The percent signal change based parameter estimates (β) showed that in young adults, higher order control areas and areas associated with ToM became downregulated early during viewing the facial expressions, but upregulated in older adults. During subsequent reasoning about the facial expressions, the opposite pattern with upregulated aPFC and ACC regions in young adults and downregulated aPFC and ACC in older adults was found. Correlation analyses showed that activation within different clusters of the aPFC was associated with low empathic reasoning performance, low performance on the Eyes Test and low explicit facial affect recognition ability in older adults, but activation in left aPFC went along with high general emotional competence in young adults. These regions have been previously implicated in subordinate processes such as attention, working-memory and multitasking and ToM [21]. In addition, there is evidence from transcranial stimulation experiments suggesting that the left lateral aPFC is responsible for inhibiting regions relevant for automatic emotional processing and activating regions necessary for rule-driven behavior during reaction towards emotional facial expressions [40]. Similar results were found for the left and right ACC and SDMFC, areas which have been shown to be crucial for empathic valuation [15,41], social perception [39,42] and ToM [11,14,16]. While activation in this ToM-associated part of the brain was associated with good empathic reasoning performance, high facial affect recognition ability and a high degree of emotional competence in young adults, inverse correlations between activation of the ACC and performance on the Eyes Test and self-reported empathy were found in older adults.

These results suggest different mechanisms or strategies of dealing with the task in older as compared to young adults. Higher order control and ToM areas that became engaged early during confrontation with facial expressions of emotion in older adults bound cognitive resources, with the consequence that these resources were not available during subsequent emotional state reasoning. Alternatively, early engagement of the aPFC during confrontation with the facial expressions as the basis for subsequent reasoning might have downregulated areas important for automatic emotional appraisal while upregulating higher order areas subserving ToM too early to ensure a goal-directed response [40]. Young adults, on the contrary, did not show any early aPFC or SDMFC engagement when pre-evaluating the facial expressions. The different timing of activation of regions associated with basal empathy [14,20], ToM [14], and cognitive control [21] might have led to a more efficient processing of the reasoning task in young adults, as reflected by the behavioral data.

Besides age-related differences in cerebral processing, we expected young and older adults to share important nodes within the empathy network. In fact, young and older adults recruited similar regions within the inferior frontal cortex (BA 47), anterior insula, ACC, premotor cortex, and IPL, even though there were striking differences in timing. Statistically, the left IFG (BA 45), corresponding roughly to Broca’s area [43], was the only region young and older adults shared during reasoning, most likely related to covert speech during sentence reading.

In the present study, we were able to show that the differences between young and older adults were not based on the recruitment of completely different brain areas but that similar areas became engaged at different time points during the empathic reasoning paradigm. In future studies it would therefore be promising to combine a comparable design with electroencephalography in order to gain insight into the temporal order of the cerebral processes during reasoning about emotional states.

The current study has limitations which should not go unmentioned. First, the sample sizes of the current study are relatively small. Compared to pure behavioral studies, fMRI studies are typically based on smaller samples due to stricter inclusion criteria and higher drop-out rates (e.g. movement artefacts during fMRI scanning). To compensate for the small sample size, we used a high number of repetitions per condition in order to ensure sufficient experimental power of the fMRI data. Second, in accordance with most other studies, we compared two age groups, namely young adults and a sample of older adults. Instead of two groups it would have been interesting to form three age groups in order to compare performance and brain activation patterns between young (20-38), middle-aged (40-59) and old adults (> 61 years) [44,45]. However, people of old age are not only difficult to recruit for brain imaging studies due to the strict inclusion criteria (e.g. no metals like pacemakers or other implants), they are also at risk of exhibiting disease-related brain changes rather than purely age-related abnormalities, which easily lead to confounding results. For this reason, we did not recruit old people for the current study. Our results can, therefore, only be generalized to young and older, i.e. middle-aged, adults.

Taken together, we provide novel data that shed light on the underlying mechanisms explaining the differences in emotional state reasoning between young and older adults. Like young adults, older adults invested a reasonable amount of time into the inference of emotional states of high social impact, but appeared to respond hastily to low impact emotional states. This probably reflected an inefficient strategy to use cognitive resources. While basal empathic and higher order control and ToM areas became engaged early in older adults, young adults recruited a higher order dominated network at a later time point during the reasoning paradigm. We conclude that binding of cognitive resources necessary for reasoning processes and aPFC-mediated premature engagement of higher order ToM areas might explain the reduced efficiency and accuracy of older adults.

References

- Ekman P, Friesen WV (1969) Nonverbal leakage and clues to deception. Psychiatry 32: 88-106.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I (2001) The “Reading the Mind in the Eyes” Test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry 42: 241-251.

- Rohr M, Degner J, Wentura D (2012) Masked emotional priming beyond global valence activations. Cogn Emot 26: 224-244.

- Ellsworth PC, Scherer KR (2003) Appraisal processes in emotion. In: Davidson RJ, Goldsmith H, Scherer KR (Eds.), Handbook of Affective Sciences. Oxford University Press, New York and Oxford.

- Maylor EA, Moulson JM, Muncer AM, Taylor LA (2002) Does performance on theory of mind tasks decline in older age? Br J Psychol 93: 465-485.

- Slessor G, Phillips LH, Bull R (2007) Exploring the specificity of age-related differences in theory of mind tasks. Psychol Aging 22: 639-643.

- Henry JD, Phillips LH, Ruffman T, Bailey PE (2013) A meta-analytic review of age differences in theory of mind. Psychol Aging 28: 826-839.

- Keightley ML, Winocur G, Burianova H, Hongwanishkul D, Grady CL (2006) Age effects on social cognition: faces tell a different story. Psychol Aging 21: 558-572.

- Li X, Wang K, Wang F, Tao Q, Xie Y, et al. (2013) Aging of theory of mind: The influence of educational level and cognitive processing. Int J Psychol 48: 715-727.

- Richter D, Kunzmann U (2011) Age differences in three facets of empathy: performance-based evidence. Psychol Aging 26: 60-70.

- Vogeley K, Bussfeld P, Newen A, Herrmann S, Happé F, et al. (2001) Mind reading: neural mechanisms of theory of mind and self-perspective. Neuroimage 14: 170-181.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL (2003) Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci U S A 100: 5497-5502.

- Seitz RJ, Schäfer R, Scherfeld D, Friederichs S, Popp K, et al. (2008) Valuating other people’s emotional face expression: a combined functional magnetic resonance imaging and electroencephalography study. Neuroscience 152: 713-722.

- Fan Y, Duncan NW, de Greck M, Northoff G (2011) Is there a core neural network in empathy? An fMRI based quantitative meta-analysis. Neurosci Biobehav Rev 35: 903-911.

- Prochnow D, Höing B, Kleiser R, Lindenberg R, Wittsack HJ, et al. (2013) The neural correlates of affect reading: an fMRI study on faces and gestures. Behav Brain Res 237: 270-277.

- Hooker CI, Verosky SC, Germine LT, Knight RT, D’Esposito M (2008) Mentalizing about emotion and its relationship to empathy. Soc Cogn Affect Neurosci 3: 204-217.

- Krendl AC, Heatherton TF, Kensinger EA (2009) Aging minds and twisting attitudes: an fMRI investigation of age differences in inhibiting prejudice. Psychol Aging 24: 530-541.

- Charlton RA, Barrick TR, Markus HS, Morris RG (2009) Theory of mind associations with other cognitive functions and brain imaging in normal aging. Psychol Aging 24: 338-348.

- Jankowiak-Siuda K, Rymarczyk K, Grabowska A (2011) How we empathize with others: a neurobiological perspective. Med Sci Monit 17: RA18-24.

- Lamm C, Batson CD, Decety J (2007) The neural substrate of human empathy: effects of perspective-taking and cognitive appraisal. J Cogn Neurosci 19: 42-58.

- Gilbert SJ, Spengler S, Simons JS, Steele JD, Lawrie SM, et al. (2006) Functional specialization within rostral prefrontal cortex (area 10): a meta-analysis. J Cogn Neurosci 18: 932-948.

- Bush G, Luu P, Posner MI (2000) Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci 4: 215-222.

- Fornito A, Whittle S, Wood SJ, Velakoulis D, Pantelis C, et al. (2006) The influence of sulcal variability on morphometry of the human anterior cingulate and paracingulate cortex. Neuroimage 33: 843-854.

- Benton AL, Sivan AB, Hamsher K, Varney NR, Spreen O (1994) Contributions to neuropsychological assessment. Oxford University Press, New York.

- Hautzinger M, Bailer M, Worall H, Keller F (1994) Beck Depressions-Inventar (BDI). Testhandbuch, Huber, Bern.

- Bagby RM, Parker JD, Taylor GJ (1994) The twenty-item Toronto Alexithymia Scale--I. Item selection and cross-validation of the factor structure. J Psychosom Res 38: 23-32.

- Lundqvist D, Flykt A, Öhman A (1998) The Karolinska Directed Emotional Faces - KDEF, CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Lundqvist D, Litton JE (1998) The Averaged Karolinska Directed Emotional Faces - AKDEF, CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Ekman P, Friesen WV (1976) Pictures of facial affect. Consulting Psychologists Press, Palo Alto.

- Prochnow D, Donell J, Schäfer R, Jörgens S, Hartung HP, et al. (2011) Alexithymia and impaired facial affect recognition in multiple sclerosis. J Neurol 258: 1683-1688.

- Davis MH (1980) A multidimensional approach to individual differences in empathy. JSAS Catalog of Selected Documents in Psychology 10: 85.

- Talairach J, Tournoux P (1988) Co-planar stereotaxic atlas of the human brain. Thieme, New York.

- Rizzolatti G, Craighero L (2004) The mirror-neuron system. Annu Rev Neurosci 27: 169-192.

- Schulte-Rüther M, Markowitsch HJ, Fink GR, Piefke M (2007) Mirror neuron and theory of mind mechanisms involved in face-to-face interactions: a functional magnetic resonance imaging approach to empathy. J Cogn Neurosci 19: 1354-1372.

- Hynes CA, Baird AA, Grafton ST (2006) Differential role of the orbital frontal lobe in emotional versus cognitive perspective-taking. Neuropsychologia 44: 374-383.

- Krain AL, Wilson AM, Arbuckle R, Castellanos FX, Milham MP (2006) Distinct neural mechanisms of risk and ambiguity: a meta-analysis of decision-making. Neuroimage 32: 477-484.

- Hall J, Whalley HC, McKirdy JW, Sprengelmeyer R, Santos IM, et al. (2010) A common neural system mediating two different forms of social judgement. Psychol Med 40: 1183-1192.

- Gallagher HL, Happé F, Brunswick N, Fletcher PC, Frith U, et al. (2000) Reading the mind in cartoons and stories: an fMRI study of ‘theory of mind’ in verbal and nonverbal tasks. Neuropsychologia 38: 11-21.

- Lawrence EJ, Shaw P, Giampietro VP, Surguladze S, Brammer MJ, et al. (2006) The role of ‘shared representations’ in social perception and empathy: an fMRI study. Neuroimage 29: 1173-1184.

- Volman I, Roelofs K, Koch S, Verhagen L, Toni I (2011) Anterior prefrontal cortex inhibition impairs control over social emotional actions. Curr Biol 21: 1766-1770.

- Seitz RJ, Nickel J, Azari NP (2006) Functional modularity of the medial prefrontal cortex: involvement in human empathy. Neuropsychology 20: 743-751.

- Krämer UM, Mohammadi B, Donamayor N, Samii A, Münte TF (2010) Emotional and cognitive aspects of empathy and their relation to social cognition--an fMRI study. Brain Res 1311: 110-120.

- Lindenberg R, Fangerau H, Seitz RJ (2007) “Broca’s area” as a collective term? Brain Lang 102: 22-29.

- Isaacowitz DM, Löckenhoff CE, Lane RD, Wright R, Sechrest L, et al. (2007) Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol Aging 22: 147-159.

- MacPherson SE, Phillips LH, Della Sala S (2002) Age, executive function, and social decision making: a dorsolateral prefrontal theory of cognitive aging. Psychol Aging 17: 598-609.